Human-centered design for mental well-being

It is everywhere, yes. Artificial Intelligence is a wave that everyone wants to surf, even those who don’t know how. It is a technology that has marked a historical milestone and pushes us to look for ways to implement it, either to feel “modern” or to achieve what is considered the true objective of AI: to optimize.

Optimize protocols, time and margin of error. In the case of mental health, AI can help diagnose, treat or intervene. Is it a threat to health professionals? I don’t believe so. Beyond ethical issues, cybersecurity, a lack of diversity in the data being analyzed, cultural sensitivity, and language barriers remain concerns when bringing this technological vision to mental health care. Because these sensitive problems require empathy, human connection and holistic, personalized and multidisciplinary approaches, it is imperative to explore these aspects.

The role of UX and design in creating conversational AI for mental health

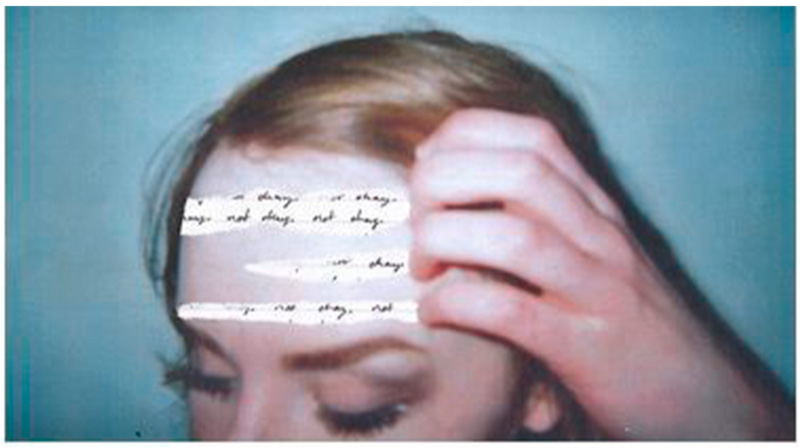

Alex feels anxious because he doesn’t feel secure in his romantic relationship. Ana feels overwhelmed by the loss of her job. Experiences of unresolved mental pain are very common in the modern world.

To make matters worse, we are particularly adept at distracting ourselves from mental pain. Current systems to support us through such challenges are absent or inadequate for care seekers, caregivers, and their broader communities.

The world is facing two major mental health crises: a crisis of purpose and a crisis of affect. By recognizing this, we can more easily build systems of change that foster awareness, receptivity, and gratitude, reinforcing all of this in a virtuous cycle of positive action.

But the world is also undergoing a technological transformation: AI is being used to provide mental health care to people who have decided to start, or at least to approach, a conversation. This is therapy through online applications whose conversations promise compassion and “listening.” It is delivered through an interface that, from a UX/UI perspective, should in my view have at the very least a focus on human-centered design (HCD).

How can human-centered design be applied to improve mental well-being?

01/ Users must be researched in depth.

- What they need and what keeps them up at night : Conduct interviews, surveys and focus groups with people experiencing mental health problems. Explore their anxieties, their preferred communication styles, and which features they find most useful.

- Be careful with user safety : Mental health is a delicate topic, full of taboos. Integrate safety protocols that identify crisis situations and offer appropriate resources.

- Embrace diversity – Consider the needs of diverse cultural and demographic backgrounds. Language options and communication styles must be offered to ensure inclusion.
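As a rough illustration of the safety point above, here is a minimal Python sketch of a message screen that flags possible crisis language and returns a resource message. Everything in it is an assumption made for illustration: the phrase list, the `SafetyCheck` type, the `check_message` function and the resource text are hypothetical. Simple phrase matching is not a validated clinical method, so a real protocol would need to be designed and reviewed with mental health professionals.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrase list, for illustration only. A real list would be
# written and reviewed by mental health professionals.
CRISIS_PHRASES = (
    "hurt myself",
    "end my life",
    "can't go on",
)

# Placeholder text. Real resources depend on the user's country and language.
CRISIS_RESOURCE_MESSAGE = (
    "It sounds like you are going through something very difficult. "
    "Please contact your local emergency services or a crisis line, "
    "or reach out to someone you trust right now."
)


@dataclass
class SafetyCheck:
    is_crisis: bool
    message: Optional[str] = None


def check_message(text: str) -> SafetyCheck:
    """Flag a message if it contains any of the listed phrases."""
    # Lowercase and normalize curly apostrophes so "can’t" matches "can't".
    normalized = text.lower().replace("’", "'")
    if any(phrase in normalized for phrase in CRISIS_PHRASES):
        return SafetyCheck(is_crisis=True, message=CRISIS_RESOURCE_MESSAGE)
    return SafetyCheck(is_crisis=False)
```

In this sketch the check would run before any other reply is generated, so that a flagged message always receives the resource text first.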

02/ Collaborative design is essential.

- Engage mental health experts : Although designers joke that they are almost psychologists when trying to decipher what a client wants, it is imperative to partner with real psychologists, therapists and mental health professionals to ensure that AI interventions and techniques are evidence-based and effective.

- User Testing and Feedback – During development, conduct user testing sessions to gather feedback on the effectiveness, tone, and user interface of the AI. These sessions are where the design is refined based on what users tell you, resulting in a more positive and useful experience.

03/ Easy-to-use interface.

Imagine a user with anxiety problems who is encouraged to interact with your application. What would the ideal experience look like?

- Simple and intuitive – The interface should be easy to navigate, even for users with limited technical experience. Prioritize clear instructions and an orderly design.

- Accessibility features – Integrate features such as screen reader support, adjustable text size, and voice control options to ensure accessibility for users with disabilities.

- Personalization – Allow users to personalize their experience by setting language preferences, communication style, and the types of support offered (relaxation techniques, journaling prompts, etc.).

We must remember that we are entering the era of “hyper-personalization”, one increasingly driven by AI.
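The personalization options described above could be modeled as a small settings object. The following Python sketch is only one possible shape, not a prescribed design: the names `UserPreferences`, `Tone`, `SupportType` and `available_activities`, along with the default values, are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Set


class Tone(Enum):
    GENTLE = "gentle"
    DIRECT = "direct"


class SupportType(Enum):
    RELAXATION = "relaxation techniques"
    JOURNALING = "journaling prompts"


@dataclass
class UserPreferences:
    language: str = "en"      # language preference
    tone: Tone = Tone.GENTLE  # communication style
    text_scale: float = 1.0   # adjustable text size (1.0 = default size)
    support_types: Set[SupportType] = field(
        default_factory=lambda: {SupportType.RELAXATION}
    )


def available_activities(prefs: UserPreferences) -> List[str]:
    """Return the support types the user has opted into, sorted by name."""
    return sorted(s.value for s in prefs.support_types)
```

A user who switches the language to Spanish and opts into both kinds of support would be represented as `UserPreferences(language="es", support_types={SupportType.JOURNALING, SupportType.RELAXATION})`.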

04/ Ethical considerations.

- Transparency and user control : Be transparent about the limitations and capabilities of AI. Users should always be in control of the conversation and have the option to end it at any time.

- Data Privacy – Ensure that user data is collected, stored and used ethically, in accordance with the data privacy regulations that apply to you. Remember too that AI learns from data: it analyzes varied sets of information and predicts the patterns associated with various mental health problems.

- Focus on empowerment : The goal of AI should be to empower users, not replace human therapists. Encourage users to seek professional help when necessary, since AI still lacks something that characterizes human beings: empathy and emotional connection.
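To make transparency and user control more concrete, here is a minimal Python sketch of a conversation session that opens with a disclosure, stores messages only if the user opts in, and lets the user end the conversation at any time. The `Session` class, the stop words and all message texts are hypothetical choices for illustration, and the reply itself is a fixed placeholder rather than real AI output.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical disclosure text shown at the start of every session.
DISCLOSURE = (
    "I am an AI assistant, not a human therapist. I can offer general support, "
    "but I cannot diagnose or replace professional care. "
    "Type 'stop' at any time to end this conversation."
)

# Hypothetical words the user can type to end the conversation.
STOP_WORDS = {"stop", "quit", "exit"}


@dataclass
class Session:
    store_transcript: bool = False  # storage is opt-in, off by default
    active: bool = True
    transcript: List[str] = field(default_factory=list)

    def start(self) -> str:
        """Open the session by stating what the AI is and is not."""
        return DISCLOSURE

    def handle(self, user_text: str) -> str:
        if not self.active:
            return "This conversation has ended."
        if user_text.strip().lower() in STOP_WORDS:
            # The user stays in control: end immediately, no questions asked.
            self.active = False
            return (
                "Conversation ended. If you need more support, "
                "consider reaching out to a professional."
            )
        if self.store_transcript:
            self.transcript.append(user_text)
        # Placeholder reply; a real system would generate a response here.
        return "Thank you for sharing. Can you tell me a little more?"
```

Keeping storage off by default means a user who never opts in leaves no transcript behind, which is one simple way to honor the data privacy point.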

Conversational AI for mental health: a new era of support

Conversational AI, powered by advanced algorithms and natural language processing, is revolutionizing the way we approach mental health care. These AI systems, designed to simulate human-like interactions, provide unprecedented levels of accessibility and convenience for people seeking mental health support. Whether through chatbots, virtual therapists, or AI-powered mental health platforms, conversational AI is making mental health care more accessible and less stigmatized.

Conversational AI for mental health can take different forms, but they all share some common characteristics:

Interface

- Text-based chat – This is the most common format, allowing users to type messages and receive responses from AI.

- Voice Chat – Some AI tools can use speech recognition to achieve a more natural conversation flow.

- Interactive elements : Certain apps may incorporate interactive exercises, mood trackers, or journaling prompts to enhance the experience.

Conversation style

- Supportive and encouraging – AI uses positive and empathetic language to create a safe and supportive space.

- Open-ended questions – AI asks questions that prompt users to explore their thoughts and feelings.

- Active listening – AI analyzes user responses to adapt and provide relevant advice.

Below is an example of a brief interaction with a conversational AI for mental health:

User : I’ve been feeling very stressed lately.

AI : Hello, I’m sorry you’re feeling stressed. Can you tell me a little more about what is causing you stress?

User : Work deadlines are approaching and I’m having trouble sleeping.

AI : It sounds like you have a lot on your plate. Have you tried any relaxation techniques before going to bed?

User : No, not really.

AI : Would you be interested in learning some simple breathing exercises that could help you fall asleep more easily?

By focusing on these aspects of UX, conversational AI for mental health can become a valuable tool for promoting emotional well-being and for making mental health support more accessible from the object people use most today: the cell phone.

The benefits of conversational AI on mental health

Integrating conversational AI into mental health care brings numerous benefits, improving both the accessibility and quality of care:

- 24/7 Availability – AI mental health tools are accessible at any time and offer support when needed.

- Anonymity and reduced stigma : Users can seek help without fear of being judged, promoting openness and honesty.

- Cost-effective – AI therapy can be more affordable than traditional therapy sessions, which can widen access to mental health support.

Is this why it is mostly young people who turn to these AI conversation services to talk about their mental health problems?